What Is a Forward Proxy Server?

A forward proxy, often called a proxy server or web proxy, is a server that sits in front of a group of machines within the same network. When these computers make requests to sites and services, usually ones outside the network or on the Internet, the proxy server intercepts those requests and communicates with the web servers on behalf of the clients, acting as a middleman. By doing so, it can control traffic according to custom policies, mask client IP addresses by presenting its own address instead, enforce security protocols, and block unknown traffic.

Figure: Direct Communication Flow

The diagram above shows the standard communication flow, in which Laptop/Phone reach out directly to website AZ: the client sends requests to the server, and the server responds to the client.

Figure: Forward Proxy Communication Flow

When a forward proxy is in place, Laptop/Phone/Desktop instead send their requests to FP, which forwards them to website AZ. Website AZ then sends its response to FP, which in turn forwards the response back to Laptop/Phone/Desktop.
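To make the two flows concrete, here is a toy simulation in Python. It is a sketch under simplifying assumptions, not a working network proxy: the `Request` class, the `website-az.example` host and the IP addresses are all hypothetical, and no real sockets are opened. It shows the key effect of the extra hop: website AZ sees the proxy's address instead of the client's.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Request:
    source_ip: str  # the address the receiving server sees as the sender
    host: str       # destination website (hypothetical name)
    path: str


def origin_server(req: Request) -> str:
    """Stand-in for website AZ: it only knows who delivered the request."""
    return f"{req.host}{req.path} served to {req.source_ip}"


class ForwardProxy:
    """Toy forward proxy that contacts the origin on behalf of its clients."""

    def __init__(self, proxy_ip: str):
        self.proxy_ip = proxy_ip

    def handle(self, req: Request) -> str:
        # A real proxy opens its own connection to the origin, so the origin
        # sees the proxy's address; we model that by replacing source_ip.
        upstream_req = replace(req, source_ip=self.proxy_ip)
        response = origin_server(upstream_req)
        # Relay the origin's response back to the client unchanged.
        return response


# Hypothetical addresses: a private client IP and a documentation-range proxy IP.
laptop = Request(source_ip="192.168.1.20", host="website-az.example", path="/index.html")
fp = ForwardProxy(proxy_ip="203.0.113.10")

print(origin_server(laptop))  # direct: website-az.example/index.html served to 192.168.1.20
print(fp.handle(laptop))      # via FP: website-az.example/index.html served to 203.0.113.10
```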

Reasons to Use a Forward Proxy:

Restricted Browsing

Governments, schools, and organizations use proxy servers to give their users access to a limited version of the Internet. A forward proxy can enforce these restrictions because user requests go through the proxy rather than directly to the websites.

Blocking Specific Content

Proxies can also be set up to block a group of users from accessing certain websites. For example, an office network might be configured to reach the web through a proxy that filters content, blocking requests to social media or online streaming sites.
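A minimal sketch of the filtering check such a proxy might run before forwarding a request, assuming a simple domain blocklist. The domain names and the `is_blocked`/`filter_request` helpers are hypothetical; real proxy products use their own policy engines and configuration formats.

```python
from urllib.parse import urlsplit

# Hypothetical policy list; a real deployment would load this from configuration.
BLOCKED_DOMAINS = {"socialmedia.example", "streaming.example"}


def is_blocked(url: str, blocked: set[str] = BLOCKED_DOMAINS) -> bool:
    """Return True if the URL's host is a blocked domain or a subdomain of one."""
    host = (urlsplit(url).hostname or "").lower()
    # Matching on "." + domain avoids catching unrelated hosts that merely
    # end with the same letters (e.g. notsocialmedia.example).
    return any(host == d or host.endswith("." + d) for d in blocked)


def filter_request(url: str) -> tuple[int, str]:
    """Blocked requests never leave the proxy; the client gets a 403 instead."""
    if is_blocked(url):
        return 403, "Forbidden by proxy policy"
    return 200, f"forwarded {url}"


print(filter_request("https://www.socialmedia.example/feed"))  # (403, 'Forbidden by proxy policy')
print(filter_request("https://docs.example/page"))             # (200, 'forwarded https://docs.example/page')
```

A restricted-browsing setup of the kind described above would invert the check and forward only hosts that appear on an allowlist.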

What Is a Reverse Proxy Server?

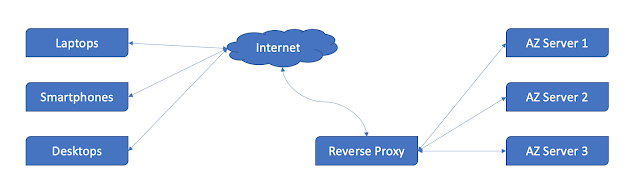

A reverse proxy is a server that sits in front of one or more web servers, intercepting requests from clients. This is different from a forward proxy, where the proxy sits in front of the clients. With a reverse proxy, when clients send requests to the origin server of a website, those requests are intercepted at the network edge by the reverse proxy server. The reverse proxy server then sends requests to, and receives responses from, the origin server. Unlike a forward proxy, which protects clients, a reverse proxy protects servers.

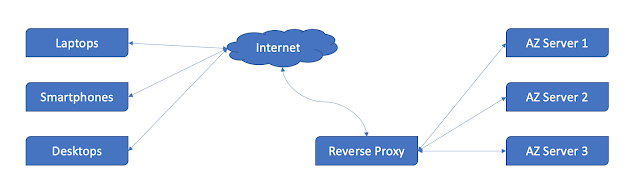

Figure: Reverse Proxy Communication Flow

A reverse proxy effectively serves as a gateway between clients and application servers. It can handle access policy management and traffic routing, and it hides the identity of the server that actually processes the request.
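Traffic routing at the gateway often comes down to a lookup table from public URL paths to private backends. The sketch below assumes longest-prefix matching on the path; the prefixes and the `10.0.0.x` backend addresses are hypothetical. Clients only ever see the public path, while the internal address stays hidden behind the proxy.

```python
# Hypothetical routing table: public path prefix -> private backend address.
ROUTES = {
    "/api/": "http://10.0.0.11:8080",
    "/static/": "http://10.0.0.12:8080",
    "/": "http://10.0.0.10:8080",  # catch-all for everything else
}


def route(path: str) -> str:
    """Return the internal URL a request path should be forwarded to.

    The longest matching prefix wins, so "/api/users" goes to the API
    backend rather than the catch-all "/" backend.
    """
    matches = [prefix for prefix in ROUTES if path.startswith(prefix)]
    if not matches:
        raise ValueError(f"no route for path {path!r}")
    best = max(matches, key=len)
    return ROUTES[best] + path


print(route("/api/users"))       # http://10.0.0.11:8080/api/users
print(route("/static/app.css"))  # http://10.0.0.12:8080/static/app.css
print(route("/about"))           # http://10.0.0.10:8080/about
```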

Reasons to Use a Reverse Proxy:

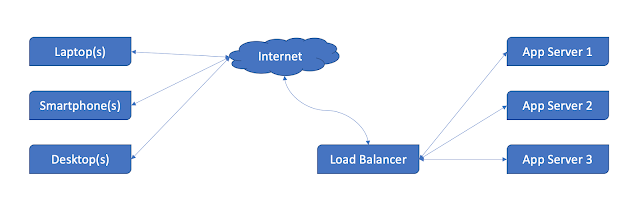

Load Balancing

A reverse proxy server can act as a traffic cop, sitting in front of your backend servers and distributing client requests across them in a way that maximizes speed and capacity utilization while ensuring that no single server is overloaded, since an overloaded server degrades performance. If a server fails completely, the proxy can send its traffic to the remaining servers.
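Round robin is one of the simplest ways to distribute requests, so the sketch below assumes it (other common strategies include least connections and weighted schemes) and adds a health flag so that failed servers are skipped. The server names are hypothetical, and in practice the health status would come from periodic health checks rather than manual `mark_down` calls.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Round-robin load balancer that skips servers marked unhealthy."""

    def __init__(self, servers: list[str]):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server: str) -> None:
        self.healthy.discard(server)

    def mark_up(self, server: str) -> None:
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self) -> str:
        # Walk at most one full lap of the ring looking for a healthy server.
        for _ in range(len(self.servers)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backend servers available")


lb = RoundRobinBalancer(["app1", "app2", "app3"])  # hypothetical backends
print([lb.next_server() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']
lb.mark_down("app2")                          # e.g. app2 failed a health check
print([lb.next_server() for _ in range(4)])  # ['app3', 'app1', 'app3', 'app1']
```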

Global Server Load Balancing

In this form of load balancing, a website is distributed across several servers around the globe, and the reverse proxy sends each client to the server that is geographically closest to them. This shortens the distance that requests and responses need to travel, minimizing load times.
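The "closest server" decision can be sketched as a great-circle distance comparison. This assumes the client's latitude and longitude are already known; real GSLB systems usually infer location from the client's IP address or DNS resolver, or measure latency instead. The region names and coordinates are hypothetical.

```python
import math

# Hypothetical data centers: name -> (latitude, longitude) in degrees.
REGIONS = {
    "us-east": (39.0, -77.5),   # near Virginia
    "eu-west": (53.3, -6.3),    # near Dublin
    "ap-south": (19.1, 72.9),   # near Mumbai
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a: tuple[float, float], b: tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometres."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat, dlon = lat2 - lat1, lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_region(client_location: tuple[float, float]) -> str:
    """Pick the region whose data center is closest to the client."""
    return min(REGIONS, key=lambda name: haversine_km(client_location, REGIONS[name]))


print(nearest_region((51.5, -0.1)))   # eu-west (client in London)
print(nearest_region((40.7, -74.0)))  # us-east (client in New York)
```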

SSL Encryption

Encrypting and decrypting SSL/TLS traffic for each client can be computationally expensive for an origin server. A reverse proxy can be configured to decrypt all incoming requests and encrypt all outgoing responses (often called TLS termination), freeing up valuable resources on the origin server.

Protection from Attacks

With a reverse proxy in place, a website or service never needs to reveal the identity of its origin servers, such as their IP addresses. The proxy also acts as an additional line of defense against security attacks, making it much harder for attackers to launch a targeted attack, such as a DDoS attack, against those servers.
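Besides hiding the origin's IP address, a reverse proxy can strip response headers that advertise the origin's software stack before relaying a response. This is a minimal sketch assuming responses are plain header dictionaries; the header list is a common but not exhaustive selection, and the example values are hypothetical.

```python
# Response headers that commonly reveal details about the origin server's software.
IDENTIFYING_HEADERS = {"server", "x-powered-by", "x-aspnet-version"}


def sanitize_response_headers(headers: dict[str, str]) -> dict[str, str]:
    """Drop headers that leak origin-server identity (case-insensitive match)."""
    return {k: v for k, v in headers.items() if k.lower() not in IDENTIFYING_HEADERS}


# Hypothetical headers as returned by an origin server.
upstream = {
    "Content-Type": "text/html",
    "Server": "Apache/2.4.57 (Ubuntu)",
    "X-Powered-By": "PHP/8.2.7",
}
print(sanitize_response_headers(upstream))  # {'Content-Type': 'text/html'}
```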

Caching

Reverse proxies can compress inbound and outbound data as well as cache commonly requested content, so repeat requests can be answered without contacting the origin server. Both boost the performance of traffic between clients and servers.
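Here is a minimal sketch of the caching idea, assuming a fixed time-to-live per URL. Real proxies also honour `Cache-Control` headers and vary cache entries on request headers, which this ignores. The `fetch_from_origin` function is a hypothetical stand-in for the real upstream request.

```python
import time


class ResponseCache:
    """Tiny TTL cache keyed by request URL (a simplified illustration)."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested deterministically
        self._store: dict[str, tuple[float, bytes]] = {}

    def get_or_fetch(self, url: str, fetch_from_origin) -> tuple[bytes, bool]:
        """Return (body, cache_hit). Calls the origin only on a miss or expiry."""
        now = self.clock()
        entry = self._store.get(url)
        if entry is not None and now < entry[0]:
            return entry[1], True
        body = fetch_from_origin(url)
        self._store[url] = (now + self.ttl, body)
        return body, False


origin_calls = []


def fetch_from_origin(url: str) -> bytes:  # hypothetical upstream call
    origin_calls.append(url)
    return b"<html>home</html>"


cache = ResponseCache(ttl_seconds=60)
cache.get_or_fetch("/home", fetch_from_origin)  # miss: the origin is contacted
cache.get_or_fetch("/home", fetch_from_origin)  # hit: served from the cache
print(len(origin_calls))  # 1
```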

Superior Compression

Server responses can consume a lot of bandwidth. Compressing them (e.g. with gzip) before sending them to the client reduces the amount of bandwidth required, speeding up responses over the network.
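A sketch of how a proxy might decide whether to gzip a response, using Python's standard `gzip` module. It assumes the client's `Accept-Encoding` header is passed in as a string and ignores quality values (`q=`) for brevity; the 1024-byte threshold is a hypothetical choice, since very small bodies can grow once gzip's header overhead is added.

```python
import gzip


def maybe_compress(body: bytes, accept_encoding: str, min_size: int = 1024) -> tuple[bytes, dict[str, str]]:
    """Gzip the body if the client accepts gzip and the body is large enough.

    Returns (body, extra_headers). Quality values in Accept-Encoding are
    ignored in this sketch.
    """
    accepted = {token.split(";")[0].strip().lower() for token in accept_encoding.split(",")}
    # Vary tells downstream caches that the response depends on Accept-Encoding.
    headers = {"Vary": "Accept-Encoding"}
    if "gzip" in accepted and len(body) >= min_size:
        headers["Content-Encoding"] = "gzip"
        return gzip.compress(body), headers
    return body, headers


page = b"<p>hello proxy</p>" * 200
compressed, extra = maybe_compress(page, "gzip, deflate, br")
print(extra, len(page), "->", len(compressed))
```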

Monitoring and Logging Traffic

A reverse proxy sees every request that goes through it, so you can use it as a central hub to monitor and log traffic. Even if you use multiple web servers to host all your website’s components, a reverse proxy makes it easier to monitor all the incoming and outgoing data from your site.
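Because every request passes through one place, a single function can log traffic for all backends. The sketch below assumes a plain list as the log sink and a `handler(method, path)` callable standing in for the backend call; the log line format is a hypothetical one loosely modelled on common access logs.

```python
import time


def log_proxied_request(access_log, client_ip, method, path, backend, handler, clock=time.monotonic):
    """Forward a request via handler and append one access-log line for it.

    handler(method, path) stands in for the call to the backend and must
    return (status_code, body_bytes).
    """
    start = clock()
    status, body = handler(method, path)
    elapsed_ms = (clock() - start) * 1000
    access_log.append(
        f'{client_ip} "{method} {path}" {status} {len(body)} backend={backend} {elapsed_ms:.1f}ms'
    )
    return status, body


# Two hypothetical backends, one shared log.
access_log = []
log_proxied_request(access_log, "198.51.100.7", "GET", "/api/items", "10.0.0.11:8080", lambda m, p: (200, b"[]"))
log_proxied_request(access_log, "198.51.100.9", "GET", "/static/app.css", "10.0.0.12:8080", lambda m, p: (200, b"body{}"))
print("\n".join(access_log))
```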

Cloudflare, Amazon CloudFront, Akamai, StackPath, DDoS-Guard, and CDNetworks are some of the well-known reverse proxy services available on the market.